In addition to building a few large models, AI companies and anyone else working with machine learning can build many smaller models to handle unique problems. These micromodels are capable of doing more, and more precisely, with less: less data, less time, and less expense.

This article explains how Lexalytics, an InMoment company, extends our “Tune First, Then Train” approach into machine learning and artificial intelligence. Full disclosure: we come from the perspective of natural language processing and text analytics, but the philosophy outlined here is broadly applicable.

Key takeaways:

Our efforts have shown that, in certain cases, building numerous micromodels is simpler and more efficient than building one large macromodel.

Micromodels reduce the challenge of sourcing and annotating large amounts of data.

The micromodel approach is valuable for any AI or machine learning company, or anyone else building machine learning systems.

More Data is Not Always Better: AI and Machine Learning Companies Should Be Wary of Unanticipated Side-Effects

Machine learning and artificial intelligence companies love data. Many data scientists still work on the outdated premise that more data is always better. Unsurprisingly, this creates an environment where the solution to any machine learning problem is to throw more data at it.

This can be a viable approach, but it’s not always the best one. Gathering, cleaning and annotating data is time-consuming and expensive. Sometimes, you simply won’t have enough data to work with.

On a more sinister note, training on ever-larger data sets can lead to unanticipated side-effects: the algorithm assigns weight or meaning to inconsequential but ubiquitous items simply because they show up a lot in the data.
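The toy example below shows this mechanism on a tiny invented data set. It uses naive per-token log-odds weighting as a stand-in for a real learning algorithm, which is our illustrative choice and not a description of any particular product. In the data, "via" and "app" are imagined as residue of a hypothetical app signature. They say nothing about sentiment, but because they appear mostly in positive examples, they pick up a positive weight.

```python
import math
from collections import Counter

# Invented toy data. "via" and "app" stand for residue of a hypothetical app
# signature: they carry no sentiment but happen to appear mostly in "pos" rows.
docs = [
    ("great service via app", "pos"),
    ("love it via app", "pos"),
    ("great price via app", "pos"),
    ("terrible service", "neg"),
    ("awful price via app", "neg"),
    ("hate the wait", "neg"),
]


def token_log_odds(docs):
    """Per-token log-odds of 'pos' versus 'neg', with add-one smoothing."""
    pos, neg = Counter(), Counter()
    for text, label in docs:
        (pos if label == "pos" else neg).update(text.split())
    vocab = set(pos) | set(neg)
    pos_total = sum(pos.values()) + len(vocab)
    neg_total = sum(neg.values()) + len(vocab)
    return {
        tok: math.log((pos[tok] + 1) / pos_total) - math.log((neg[tok] + 1) / neg_total)
        for tok in vocab
    }


weights = token_log_odds(docs)
```

Here `weights["via"]` works out to log(1.75), roughly 0.56. By contrast, the content word "service" ends up slightly negative.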

As a 2017 article on Recode put it:

“Analytics is hard, and there’s no guarantee that analyzing huge chunks of data is going to translate into meaningful insights.”

The result of “more data is always better”? Biased algorithms that directly harm people’s health and well-being, underwhelming AI implementations that disillusion management teams, and catastrophic AI failures that waste countless work hours and billions of dollars.

In fact, the danger of this approach is why we developed our Tune First, then Train methodology.

Tune First, Then Train: Reduce Overhead by Reducing Machine Learning Usage

Text analytics and natural language processing involve many problems that require supervised learning on annotated or scored text. When your approach is exclusively training-based, each of those problems needs its own annotated data, and things can quickly become unwieldy. Tune First, then Train helps to mitigate this.

Under this methodology, you tune (code parameters into) your system before you start training any machine learning models. Tuning constrains the range of possible outcomes, giving you more precise and efficient results.

Compare this with the standard “Train First, then Tune” approach, in which you build your models, review the outcomes, and only then start tweaking those models.
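Here is a minimal sketch of the ordering. It assumes that "tuning" takes the form of hand-coded parameters such as a phrase list and a sentiment lexicon. The Python below consults those tuned parameters first and falls back to a trained model only when no rule applies. The names `TUNED_PHRASES`, `TUNED_LEXICON`, `tuned_rules` and `classify` are hypothetical, and `dummy_model` merely stands in for a model trained on annotated text.

```python
from typing import Callable, Optional

# Hypothetical tuned parameters, coded into the system before any training:
# phrase overrides are checked first, then single-word lexicon entries.
TUNED_PHRASES = {"not bad": "positive", "sick of": "negative"}
TUNED_LEXICON = {"excellent": "positive", "broken": "negative"}


def tuned_rules(text: str) -> Optional[str]:
    """Return a label if a tuned rule covers the text, otherwise None."""
    lowered = text.lower()
    for phrase, label in TUNED_PHRASES.items():
        if phrase in lowered:
            return label
    for word in lowered.split():
        if word in TUNED_LEXICON:
            return TUNED_LEXICON[word]
    return None


def classify(text: str, trained_model: Callable[[str], str]) -> str:
    """Tune first: apply the tuned rules. Train second: defer to the model."""
    label = tuned_rules(text)
    return label if label is not None else trained_model(text)


def dummy_model(text: str) -> str:
    """Stand-in for a model trained on annotated text (hypothetical)."""
    return "neutral"
```

For example, `classify("The food was not bad", dummy_model)` returns `"positive"` from the phrase rule without consulting the model. By contrast, `classify("It is a phone", dummy_model)` falls through to the model. Correcting a known error then means editing a rule rather than gathering and annotating more data.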

Tune First, then Train is already serving us and our clients well, improving our ability to deliver precise, accurate analytics while reducing the time and money required. But even with prior tuning, training machine learning models is still resource-intensive. Why? Because under the “just throw data at it” philosophy, you tend to train macromodels: big models to solve big problems.

Macromodels are valuable and useful. But they require significant time and data to ensure the system can handle all the data points you need it to. And while pre-emptive tuning can handle some special cases, it isn’t ideal for others.

This is why we’ve extended our Tune First, then Train methodology to embrace something new: micromodels.

Introducing Micromodels: Training as Small as Possible, as Much as Possible

Macromodels learn from and analyze large swaths of data. But why throw more data at a problem when you can throw less at it and get the same or better results? Enter micromodels, the antithesis of “more data is better”.

What are micromodels? Micromodels are machine learning models that focus on very small, very specific tasks, such as determining the part of speech of a single word or recognizing a particular entity.

![Macromodels vs. Micromodels](https://www.lexalytics.com/wp-content/uploads/2022/04/03-1-300x280.png)

Micromodels are also particularly valuable when a concept is ambiguous or carries multiple meanings. The word “running”, for example, can be a verb, adjective or noun depending on context.

You might be running (v) late to pick up your running (adj) shoes so that you can go running (n). Similarly, the term “apple” can refer to either a fruit or a technology business depending on context.

Training a machine learning macromodel to identify and correctly categorize each instance of “running” or “apple” is a monumental task. But it’s a breeze for a micromodel.
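To make this concrete, here is a hedged sketch of a "running" micromodel. It is a tiny naive Bayes classifier that labels the word from its immediate neighbours. Naive Bayes is our choice of technique for illustration, not necessarily what Lexalytics uses. Several details are assumptions made for the example: the class name `Micromodel`, the whitespace tokenization, and the handful of training sentences. The labels follow the article's verb/adjective/noun split.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple


def context_features(tokens: List[str], i: int) -> List[str]:
    """Features for the target word at position i: its immediate neighbours."""
    prev_tok = tokens[i - 1] if i > 0 else "<s>"
    next_tok = tokens[i + 1] if i + 1 < len(tokens) else "</s>"
    return [f"prev={prev_tok}", f"next={next_tok}"]


class Micromodel:
    """Hypothetical micromodel: naive Bayes over the neighbours of one word."""

    def __init__(self, target: str):
        self.target = target
        self.label_counts: Counter = Counter()
        self.feature_counts: Dict[str, Counter] = defaultdict(Counter)
        self.vocab: set = set()

    def train(self, examples: List[Tuple[str, str]]) -> None:
        # Each example sentence must contain the target word; only its first
        # occurrence is used.
        for sentence, label in examples:
            tokens = sentence.lower().split()
            i = tokens.index(self.target)
            self.label_counts[label] += 1
            for feat in context_features(tokens, i):
                self.feature_counts[label][feat] += 1
                self.vocab.add(feat)

    def predict(self, sentence: str) -> Optional[str]:
        tokens = sentence.lower().split()
        if self.target not in tokens:
            return None  # the micromodel only acts when its subject is present
        feats = context_features(tokens, tokens.index(self.target))
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, count in self.label_counts.items():
            # Add-one (Laplace) smoothing so unseen neighbours don't zero a label out.
            denom = sum(self.feature_counts[label].values()) + len(self.vocab)
            score = math.log(count / total)
            for feat in feats:
                score += math.log((self.feature_counts[label][feat] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


running = Micromodel("running")
running.train([
    ("i am running late", "verb"),
    ("she was running late again", "verb"),
    ("we are running out of time", "verb"),
    ("buy new running shoes", "adjective"),
    ("my running shoes are worn", "adjective"),
    ("a running jacket", "adjective"),
    ("let us go running", "noun"),
    ("i love running", "noun"),
    ("they went running today", "noun"),
])
```

With only nine training sentences, this sketch labels "you might be running late" as a verb, "pick up your running shoes" as an adjective and "so you can go running" as a noun. It only has to learn which neighbouring words separate those senses. It returns `None` for any sentence without "running".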

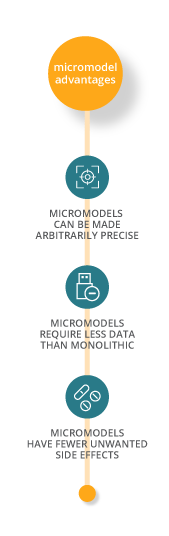

Benefits of Micromodels: More Precision, Less Data, Fewer Side-Effects

Because they’re focused so narrowly, micromodels require far less data than general models or comparable unsupervised methods to successfully do their job.

Because they act only when their subject is present, micromodels are highly precise. What’s more, this precision can be deliberately “dialed up” by adding more training examples for the specific term they focus on.

Think of it this way: Feeding a micromodel with examples of how the word “running” can be used will result in accurate, precise results far more quickly and easily than feeding a macromodel an entire language corpus of which the word “running” is only a small part.

And because micromodels are kept separate from each other, the potential side-effects that can arise when training on large data sets won’t show up when working with micromodels.
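The following is a minimal sketch of that separation, built on our own assumptions. Each hypothetical `NextWordMicromodel` learns senses for one term from the word that follows it. A registry maps each term to its own model, and `annotate` runs only the models whose term appears in a sentence. Adding training data for "apple" leaves the "running" model's learned counts exactly as they were.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple


class NextWordMicromodel:
    """Hypothetical micromodel: picks a sense for one target word by majority
    vote over the labels seen for the word that follows the target."""

    def __init__(self, target: str):
        self.target = target
        self.table: Dict[str, Counter] = defaultdict(Counter)

    def _next_word(self, tokens: List[str]) -> str:
        i = tokens.index(self.target)
        return tokens[i + 1] if i + 1 < len(tokens) else "</s>"

    def train(self, examples: List[Tuple[str, str]]) -> None:
        # Only sentences containing this model's own term are passed in.
        for sentence, label in examples:
            self.table[self._next_word(sentence.lower().split())][label] += 1

    def predict(self, sentence: str) -> Optional[str]:
        tokens = sentence.lower().split()
        if self.target not in tokens:
            return None  # acts only when its subject is present
        counts = self.table.get(self._next_word(tokens))
        return counts.most_common(1)[0][0] if counts else None


def annotate(sentence: str, registry: Dict[str, NextWordMicromodel]) -> Dict[str, str]:
    """Run every micromodel whose target term occurs in the sentence."""
    results = {}
    for term, model in registry.items():
        label = model.predict(sentence)
        if label is not None:
            results[term] = label
    return results


# One independent micromodel per term, each trained on its own toy examples.
registry = {
    "running": NextWordMicromodel("running"),
    "apple": NextWordMicromodel("apple"),
}
registry["running"].train([("new running shoes", "adjective"), ("running late again", "verb")])
registry["apple"].train([("apple pie recipe", "fruit"), ("apple stock rose", "company")])

# Retraining "apple" with extra data leaves the "running" model untouched.
before = {k: dict(v) for k, v in registry["running"].table.items()}
registry["apple"].train([("apple shares fell", "company")])
after = {k: dict(v) for k, v in registry["running"].table.items()}
assert before == after
```

For instance, `annotate("my apple stock and running shoes", registry)` returns `{"running": "adjective", "apple": "company"}`. A sentence containing neither term returns an empty dict.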

A New Way Forward for Machine Learning and AI in Natural Language Processing and Beyond

Micromodels aren’t replacing our Tune First, then Train approach to text analytics and natural language processing. Instead, think of them as extending it into its 2.0 iteration.

Tuning remains a key first step, as it’s the simplest and most efficient way to improve a system’s accuracy. With micromodels, we’re rethinking how we approach the training portion.

Our efforts have shown that, in certain cases, building numerous micromodels is simpler and more efficient than building one large macromodel. Micromodels also reduce the challenge of sourcing and annotating large amounts of data.

Lexalytics is already using the micromodel approach to solve machine learning problems in natural language processing and text analytics. But our philosophy is broadly applicable.

Micromodels are a valuable way for any AI or machine learning company to reduce their workload and improve the accuracy of their systems.

A Closing Note on Micromodels

While our underlying technology allows us to easily build these models, we don’t claim any proprietary ownership over the concept of micromodels. Nor does building or implementing micromodels require any special technology.

Using micromodels simply involves building many small models rather than a few large models. This approach allows AI and machine learning companies to produce more accurate, more precise results with less data and time, and therefore, less cost.

Download our Tuning and Machine Learning Services data sheet, or read our full white paper: Machine Learning for Natural Language Processing and Text Analytics.