Both Forrester and Gartner say that Robotic Process Automation (RPA) is moving towards larger, transformational initiatives. As part of that shift, RPA vendors must improve their natural language processing (NLP) capabilities to support trending text analytics use cases. But larger automations require more customization from you, the RPA vendor. As companies automate larger and larger processes, the similarities between RPA deployments will diminish. Each of your customers may have different demands: on-premise or private cloud processing, specific tuning configurations, or even machine learning needs.

This leaves you with a choice: try to build new NLP capabilities yourself, or buy and integrate a text analytics system from an existing provider?

Lexalytics, an InMoment company, has 15+ years of experience in the NLP space. In that time, we’ve helped hundreds of companies work through this “Build or Buy” decision. In this article, we’ll examine your options as an RPA vendor looking to build out a new text analytics or NLP capability.

Option 1: Building New NLP Capabilities Using Open Source

First off, you could try building your own text analytics engine based on open source NLP libraries. You have dozens to choose from, including StanfordNLP, Apache OpenNLP and NLTK. (Here’s a list of 12 open source NLP tools, organized by programming language.)

Open source NLP tools are free, flexible, and constantly under development. Indeed, the benefits of open source NLP are the benefits of all open source software: flexibility and agility, cost-effectiveness, and shared maintenance costs, to name a few.

But there are some major downsides to using open source in an RPA context: open source NLP libraries have scalability issues, have fewer or weaker NLP features, and generally support fewer languages. What’s more, open source is rarely deployable on-premise, making it a no-go for anyone operating within strict data handling requirements, such as firms operating under GDPR mandates.

And in the end, open source will always require a heavy technical lift from you, the RPA vendor. You’ll be responsible for finding a model, building and training it, and deploying it into production. And then you’ll be on the hook for re-training and re-deploying it over and over and over again.

Option 2: Building From an Off-the-Shelf Text Analytics API

Open source is great, but open source NLP for RPA is frequently a no-go. So, what about buying an off-the-shelf NLP toolkit from a vendor?

The text analytics/NLP API market is thriving. Search Google for “sentiment analysis API” and you’ll get 6,380,000 results, with offerings from Google, DeepAI, Aylien, Microsoft, and many others. A search for “text classification API” returns another 71,300,000 results. (Here’s a list of 33 more text analysis APIs from RapidAPI.)

But don’t get too excited. The fact is that most of these tools fail in the context of RPA. Why?

First, many off-the-shelf text analytics APIs can’t deploy on-premise. Some can, but most are “cloud processing or bust”. This automatically disqualifies most APIs for anyone dealing with strict data handling regulations.

Second, off-the-shelf tools tend to take a “least common denominator” or “one size fits most” approach to configuration and tuning. Why is this a problem? Imagine an off-the-shelf support ticket classifier that’s been trained on thousands, even millions of support tickets. The vendor might claim 90% accuracy rates. However, their models weren’t trained on your support tickets. Without the ability to configure and tune the system, your own data will inevitably cause the model to mis-categorize things.
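One way to see this for yourself is to score a candidate classifier against a small, hand-labeled sample of your own tickets instead of relying on the vendor's headline number. The sketch below is purely illustrative: `generic_classifier` is a hypothetical keyword-based stand-in for a vendor model (not any real API), and both ticket samples are invented for the example.

```python
def accuracy(classify, labeled_tickets):
    """Share of (text, label) pairs where classify(text) returns the label."""
    correct = sum(1 for text, label in labeled_tickets if classify(text) == label)
    return correct / len(labeled_tickets)


def generic_classifier(text):
    """Hypothetical stand-in for an off-the-shelf model with fixed categories."""
    lowered = text.lower()
    if "refund" in lowered or "charge" in lowered:
        return "billing"
    if "password" in lowered or "login" in lowered:
        return "account"
    return "other"


# Invented tickets that resemble what the generic model was built for.
vendor_like_tickets = [
    ("I need a refund", "billing"),
    ("Can't login", "account"),
    ("Reset my password", "account"),
    ("Wrong charge on my card", "billing"),
]

# Invented tickets with categories and vocabulary specific to one customer.
your_tickets = [
    ("Bot failed at the SAP posting step", "automation_error"),
    ("Invoice run was charged twice", "billing"),
    ("Orchestrator license expired", "licensing"),
    ("Unattended robot cannot login to the VM", "automation_error"),
]

print(accuracy(generic_classifier, vendor_like_tickets))
print(accuracy(generic_classifier, your_tickets))
```

In this toy setup the classifier is perfect on the vendor-like sample and gets only one of your four tickets right, because it has no notion of your categories. Without a way to configure or tune it, there is nothing you can do to close that gap.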

Worse, this approach to training often means that off-the-shelf APIs can’t identify and extract all of the data involved in your process in the first place, let alone understand the deeper meaning and context underlying it.

Third, and on a related note: text processing models from inexperienced companies are fragile. Subtle differences between the data a model was trained on, and the data it’s fed in practice, can cause total system failure.

This creates big problems in an RPA context because the documents you handle often contain complex structures and inconsistent data. For example, contracts and invoices contain both structured elements (such as tables and sub-headers) and unstructured sections (such as free-standing text paragraphs, descriptive lists, or commentary on information within tables). Two documents may share a format, like an invoice or a contract template. But these documents rarely have standardized text sections. And the data within structured elements can differ wildly between documents.

To illustrate, let’s say you want to add NLP capabilities for automatic invoice processing. You can probably find an off-the-shelf NLP API that automatically extracts data from invoices and distributes it across your databases. Trouble is, your invoices will always be slightly different from those the model was trained on. And those differences, no matter how slight, can cause off-the-shelf text analytics APIs to fail. An inexperienced NLP vendor won’t know how to handle this situation.
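The failure mode is easy to reproduce in miniature. The sketch below swaps the trained model for a deliberately simple rule-based extractor, so it is an analogy and not how any particular API works; the field labels, patterns, and sample invoices are all assumptions made up for the example.

```python
import re

# Hypothetical extractor written for a single invoice layout,
# e.g. "Invoice No: 10482" and "Total: $1,250.00".
INVOICE_NO = re.compile(r"Invoice No:\s*(\d+)")
TOTAL = re.compile(r"Total:\s*\$([\d,]+\.\d{2})")


def extract_invoice(text):
    """Return the fields the fixed patterns can find; missing fields are None."""
    number = INVOICE_NO.search(text)
    total = TOTAL.search(text)
    return {
        "invoice_number": number.group(1) if number else None,
        "total": float(total.group(1).replace(",", "")) if total else None,
    }


# The layout the patterns were written for.
seen_layout = "Invoice No: 10482\nTotal: $1,250.00"
# The same information with slightly different labels and currency format.
new_layout = "Invoice #10482\nAmount due: 1,250.00 USD"

print(extract_invoice(seen_layout))
print(extract_invoice(new_layout))
```

The first invoice yields both fields, while the second yields nothing at all, even though a person would read the two documents as equivalent. A statistical model usually degrades less abruptly than a regular expression, but the underlying problem is the same one described above.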

Option 3: Buying and Integrating a Complete, Customizable Text Analytics Solution from an Experienced NLP Company

So far, we’ve explored open source NLP libraries and off-the-shelf text analytics APIs. The former is free but requires a heavy technical lift. And open source often can’t meet your deployment or feature requirements. Off-the-shelf APIs don’t work for similar reasons, even though you pay for them. What’s left?

Try a customizable text analytics API from an established company. These systems offer the best of all worlds: fast integration and out-of-the-box results, on-premise and private cloud deployment options, and deep configurability.

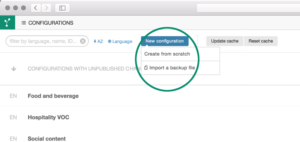

Lexalytics’ own Salience engine, for example, integrates seamlessly into your own RPA platform. In as little as 4 days, you can start offering new and better text analytics capabilities to your own customers. And from there, Salience enables you to meet market demands for customized processing by tuning and configuring every feature on a customer-by-customer basis.

Tuning and configuration are key to supporting deeper text analytics use cases. Without them, you might be able to provide moderately reliable document classification or entity recognition for common companies and products. With them, however, you can offer your customers the ability to track thousands of specific products and brands, categorize customer reviews based on the sauces mentioned, and more.
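To make the idea of customer-by-customer configuration concrete, here is a minimal sketch of entity tagging driven by a per-customer alias list. It does not show the Salience API or any vendor's actual configuration format: `tag_entities`, both configurations, and the sample review are hypothetical names and data invented for illustration.

```python
import re


def tag_entities(text, entity_config):
    """Return sorted canonical names whose configured aliases appear in text."""
    found = set()
    for canonical, aliases in entity_config.items():
        for alias in aliases:
            # Whole-word, case-insensitive match on each alias.
            if re.search(r"\b" + re.escape(alias) + r"\b", text, re.IGNORECASE):
                found.add(canonical)
                break
    return sorted(found)


# Hypothetical out-of-the-box configuration: one generic entity.
generic_config = {"Sauce": ["sauce"]}

# Hypothetical configuration tuned for one restaurant customer.
restaurant_config = {
    "Chipotle Mayo": ["chipotle mayo", "chipotle aioli"],
    "House BBQ": ["house bbq", "bbq sauce"],
}

review = "Loved the chipotle aioli, but the BBQ sauce was too sweet."

print(tag_entities(review, generic_config))
print(tag_entities(review, restaurant_config))
```

With the generic configuration, the review is tagged only as mentioning a sauce. With the customer's own configuration, the same text resolves to two specific menu items, which is the level of detail that customer actually wants to report on.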

To learn more about NLP configuration, read our Tuning & Machine Learning Services data sheet or visit the Tuning page in our support portal.

Some NLP companies even offer industry packs. These are pre-built configurations that improve accuracy and precision by 10-50% or more, with a fraction of the labor of traditional tuning. Think of them as “plug-and-play” tuning.

Of course, there are far fewer NLP vendors that meet all of these qualifications. But they’re out there, and they’re already solving text analytics use cases for RPA vendors like you.

In alphabetical order, here are some of the best partners you can find for adding text analytics and NLP capabilities into your RPA platform.

Top text analytics vendors for RPA:

Lexalytics (that’s us!)

What’s more, some of these companies already have a wealth of experience training and deploying machine learning models in an NLP context.

Choosing an NLP partner with machine learning experience means you’ll be able to work with them to solve tricky data-related problems with targeted models much faster, and for less cost.

Summary: The Best Way to Add New NLP Capabilities to Your RPA Platform is to Integrate a Customizable Text Analytics System from an Experienced Provider

The future of RPA is customized automations that address broad, transformational use cases. Within that framework, text analytics and natural language processing capabilities can work in concert with other RPA bots to unlock new automation opportunities and reinforce existing systems. But trying to build text analytics into your RPA platform from scratch will be horrendously time-consuming and extremely expensive. And that’s just to get a very basic system up and running.

Instead, by integrating a mature text analytics and NLP solution from an established provider into your RPA platform, you can…

- deliver better analytics capabilities

- solve more and broader use cases

- differentiate in a growing market

…much faster, for far less cost, and with substantially lower risk.

Here’s how that process might look:

- Integrate a text analytics system into your RPA platform

- Quickly solve trending text analytics use cases

- Build out new configurations and roll out deeper NLP features

- Offer new value by integrating text analytics into larger customer RPA initiatives

In fact, that exact outline is how one of our own customers is already solving a major shortcoming in their offerings. Curious what they’re doing, and how you can deploy a text analytics solution into your own RPA platform? Drop us a line.